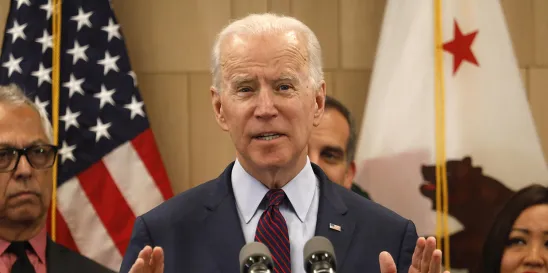

On Oct. 30, 2023, President Biden issued an Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (AI) that establishes new standards for AI safety and security. The executive order is part of a comprehensive strategy for “responsible innovation,” one that will help Americans seize the promise that AI has to offer while suppressing the risks that come with it (1). Specific concerns that this executive order seeks to address include fraud, discrimination, bias, disinformation, workers’ displacement and disempowerment, stifled competition, and risks to national security. Notably, the executive order is drafted to help support the secure and trustworthy use and development of AI through eight guiding principles. Among these eight principles are the following: (i) AI must be safe and secure; (ii) promoting responsible innovation, competition, and collaboration will allow the United States to lead in AI and unlock the technology’s potential to solve some of society’s most difficult challenges; (iii) the responsible development and use of AI require a commitment to supporting American workers; and (iv) AI policies must be consistent with [the] administration’s dedication to advancing equity and civil rights.

Key points of the executive order include the following specific goals:

- The use of watermarking tools to clearly label AI-generated content;

- The development of a talented AI workforce and of agencies to protect consumers from risks inherent to AI;

- The minimization of bias in the use of AI tools;

- The creation of guardrails to protect consumers’ data privacy; and

- The promotion of AI tools to help solve global challenges.

The executive order directs a majority of federal agencies to address AI’s specific implications for their sectors. The secretary of state and secretary of homeland security are directed to streamline visa processes for skilled AI professionals, students, and researchers; the U.S. Patent and Trademark Office is directed to provide AI guidance; and the U.S. Copyright Office is directed to recommend additional protection for AI-produced work. These agency actions will likely affect non-government entities as well, given that agencies will seek to impose various requirements, such as reporting obligations for technology providers, through contractual obligations or the invocation of statutory powers under the Defense Production Act for the national defense and the protection of critical infrastructure.

The U.S. Department of Commerce is directed to propose extensive reporting requirements for infrastructure as a service (IaaS) providers, for cyber-related sanctions purposes, whenever a foreign person uses their services to train large AI models that could be used in malicious cyber-enabled activity, as well as identity verification requirements for foreign persons obtaining IaaS accounts through foreign resellers (2). The order also requires AI companies to report on the safety testing of their models. This includes reporting requirements for models trained using substantial computing power, to the extent large amounts of computing power are acquired or as such thresholds are redefined by the secretary of commerce. Gensyn Network Head of Operations Jeff Amico noted that such reporting requirements are “essentially public company reporting requirements for startups building large models.” (3) Mr. Amico also posted the following on the X platform: https://twitter.com/_jamico/status/1719167854325772772.

With respect to the banking industry, Biden’s AI directive may help banks make informed and safer loan and credit decisions, which in turn would support the use of AI-based financial systems. Like many others, the banking sector has been experimenting with AI and testing different models to achieve its desired goals. Specifically, banks can capitalize on AI’s benefits in the field of fraud detection; AI is well suited to scanning large volumes of data and quickly detecting unusual patterns, which directly supports anti-money laundering efforts. On the consumer end, AI can be used to create a client-centric interface and mobile application experience, particularly when onboarding new customers and vendors, and to power virtual assistants and chatbots that help consumers with questions or concerns. By leveraging AI, financial firms and banks can create a transparent and interactive experience for the consumer, while making their own day-to-day activities easier.

Unsurprisingly, AI was a hot topic of conversation at Money 20/20. According to Banking Dive’s report, executive insights from the conference revealed that while most banks have not released generative AI to the end customer, many banks, fintechs, and payment leaders are reaping the rewards the technology has to offer by using it to train their virtual assistants. While banks and fintechs were originally focused on fighting fraud through the deployment of AI, they are now focusing on gaining a data advantage, streamlining and accelerating financial services, and helping democratize the financial services industry. Of course, challenges still exist, whether copyright issues with the data sets or regulatory disparities with AI amid a highly regulated industry. But so long as firms focus on using AI responsibly, there is likely to be a big uptick in AI investment in the financial services sphere, including in large language models unique to specific firms.

Given that there was no official federal legislation on AI prior to the issuance of this executive order, individual federal agencies continued to address AI in the contexts in which they regulate. On July 18, 2023, Commodity Futures Trading Commission (CFTC) Commissioner Christy Goldsmith Romero spoke at the Technology Advisory Committee meeting on responsible AI in financial services, decentralized autonomous organizations (DAOs) and other decentralized finance (DeFi), and cyber resilience (4). She addressed the concept of “responsible AI,” the tremendous benefits of AI and the growing concerns about harmful outcomes that can arise from its use, and the CFTC’s mission in promoting responsible innovation. Commissioner Romero stated that “[t]he CFTC has an important mission that includes promoting responsible innovation . . .[w]hen it comes to our regulated entities, responsible AI raises questions related to an organization’s responsibilities when it comes to AI, including governance — how decisions are being made and who will make those decisions . . .[a]s a long-time enforcement attorney, surveillance and data analysis immediately come to mind, but there could be many others.” (5)

A week later, on July 26, 2023, the U.S. Securities and Exchange Commission (SEC) proposed broad new rules under the Securities Exchange Act of 1934 (Exchange Act) and the Investment Advisers Act of 1940 (Advisers Act) to address conflicts of interest that the agency believes are posed by the use of AI and other types of analytical technologies by broker-dealers and investment advisers (6). Importantly, however, the SEC’s 3-2 vote on the proposed rules reflects a split among the SEC commissioners as to whether these proposed rules are the right approach. Specifically, these rules were intended to prevent a broker-dealer or an investment adviser from using “predictive data analytics” (PDA) and similar AI-based technologies in a manner that results in the firm prioritizing its own interests above those of its investors, particularly when such technologies are used in the context of engaging or communicating with its investors to optimize the firm’s revenue or to generate behavioral prompts or social engineering to change investor behavior to the firm’s advantage.

Not surprisingly, there has been backlash since the issuance of the executive order, particularly among researchers and open-source developers who take the position that regulation should apply not to foundational models and research but rather to applications of AI; the executive order also seems to have had a stifling effect on certain technologies and AI-related tokens (7). This executive order, however, marks the first formidable federal action toward regulating AI in the U.S. Ultimately, the execution of the Biden vision will depend on the actions of the executive branch agencies, which could find themselves challenged in court, and of the legislative branch, which is working on its own plans for regulating AI.

Additional Authors: Daniel C. Lumm, Joseph "Joe" Damon, Leslie Green, Jackson Parese, Marc Jenkins, Michael J. Halaiko, Alexandra P. Moylan

(1) Id.

(2) Id.

(3) See Stephen Alpher, AI-Related Tokens Stumble After White House Executive Order, COINDESK (Oct. 31, 2023), https://www.msn.com/en-us/news/technology/ai-related-tokens-stumble-after-white-house-executive-order/ar-AA1jaILa.

(4) CFTC, Public Statements & Remarks, Opening Statement of Commissioner Christy Goldsmith Romero at the Technology Advisory Committee Meeting on Responsible AI in Financial Services, DAOs, and Other DeFi & Cyber Resilience (July 18, 2023), https://www.cftc.gov/PressRoom/SpeechesTestimony/romerostatement071823.

(5) Id.

(6) SEC, 17 CFR Parts 240 and 275 [Release Nos. 34-97990; IA-6353; File No. S7-12-23] https://www.sec.gov/files/rules/proposed/2023/34-97990.pdf; See https://www.sec.gov/news/press-release/2023-140.

(7) See K L Krithika, Biden’s AI Executive Order Faces Backlash, GOA INSTITUTE OF MANAGEMENT (Oct. 31, 2023), https://analyticsindiamag.com/bidens-ai-executive-order-faces-backlash/.
