A couple of weeks ago we asked readers of this blog to answer a few questions on their organisation’s use of (generative) artificial intelligence, and we promised to circle back with the results. So, drum roll, the results are now in.

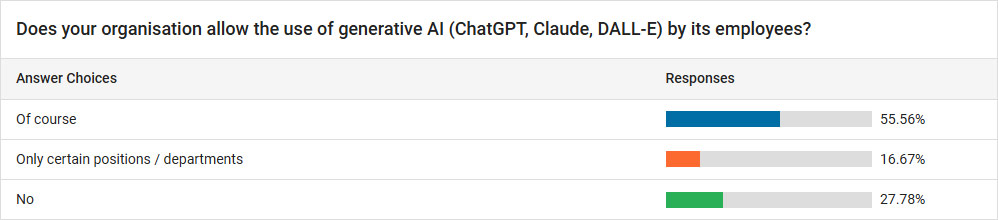

1. In our first question, we wanted to know whether your organisation allows its employees to use generative AI, such as ChatGPT, Claude or DALL-E.

While a modest majority allows it, almost 28% of respondents indicated that the use of genAI is still forbidden, and another 17% allow it only for certain positions or departments.

This first question was the logical build-up to the second:

2. If the use of genAI is allowed to any extent, does that mean the organisation has a clear set of rules around such use?

A solid 50% of respondents have actually introduced guidelines on this, and a further 22% are working on it. That is indeed the sensible approach. Employees need to know the organisation’s position on (gen)AI: whether they can use it and for what, or why they cannot. They should understand the risks of using genAI inappropriately and the sanctions they may face if they use it without complying with company rules.

Transparency is essential to these rules of play. Management should have a good understanding of where in the organisation genAI is being used. In particular, when genAI is used for research purposes or in areas where IP infringement may be a concern, employees must be transparent about the help they have had from their algorithmic co-worker. The risk of “hallucinations” in genAI is still very real, and knowing that a work product was created with the help of genAI should prompt a manager to review it more closely and critically.

Please also note that under the EU AI Act, as from last weekend (2 February 2025), providers and deployers of AI systems must ensure that their employees and contractors using AI have an adequate level of AI literacy, for example by providing training. The required level of AI literacy is determined “taking into account [the employees’] technical knowledge, experience, education and training and the context the AI systems are to be used in, and considering the persons or groups of persons on whom the AI systems are to be used”.

Since we had anticipated there would be quite a number of organisations that still prohibit the use of genAI, we had also asked:

3. What was the main driver for companies’ prohibition of the use of genAI in the workplace?

The response was not surprising. Organisations are mostly concerned about the risk of errors in AI’s responses and of its inadvertently leaking their confidential information.

While this fear of leaks is entirely justified for free applications, such as the free version of ChatGPT and popular search engines such as Bing and Google, which are increasingly powered by Large Language Models (LLMs), it is largely unjustified for the paid versions. The vendors’ business model depends on trust, and they guarantee that data entered into the paid versions of their LLMs will never be reused for training purposes. To our knowledge, there have been no incidents, nor even the slightest indication, that the large vendors disregard these promises.

This leads to the somewhat ironic conclusion that prohibiting employees from using genAI may actually make the risks the company fears more likely to materialise, as employees may then be tempted to use a free, less secure version of the application on their personal devices instead.

4. In which areas of HR do our respondents use AI?

Where respondents indicated other areas of use in HR, they mentioned intelligence gathering, improvement of communication and specific areas of recruitment, such as writing job descriptions and skills testing.

5. Does your organisation plan to increase its use of AI in the next twelve months?

A narrow majority responded that it does not.

Those respondents who anticipate increased use of AI expect it to grow generally, across all areas. Specific predictions include the use of HR bots for benefits enquiries, and forecasting.

6. If your organisation does not currently employ AI in HR, why not?

The response to this question is probably the most surprising: most organisations that do not yet use AI in HR are not held back by philosophical, technical or employee relations concerns; they simply have not got round to it yet. We expect the next 12 to 18 months to see a significant increase in usage overall, which will also lead to a similar uptick in HR.

We ended our survey with perhaps the most delicate question:

7. Do you expect that in the next 12 to 24 months, there will be redundancies within your organisation due to increased use of AI?

The large majority of respondents do not expect this.

To this same question, ChatGPT itself gave the following response:

The use of AI in businesses can indeed lead to job loss in certain sectors, especially in roles that rely heavily on routine, repetitive tasks. For example, administrative roles, customer service, or even certain manufacturing and warehouse jobs could be replaced by AI, as it can often perform these tasks more efficiently and cost-effectively. On the other hand, AI can also create new jobs, especially in fields like data analysis, machine learning, AI development, and management. Businesses will likely focus more on roles that require creativity and strategy, areas where human input is essential, like decision-making and improving customer relationships. The key will be how companies combine the use of AI with upskilling their workforce, enabling employees to adapt to the changing job landscape.

As is often – though certainly not always – the case, ChatGPT is not wrong about this. We didn’t ask it specifically about its impact on staffing levels in HR, but we think that considerable comfort can be taken from its reference to the continued sanctity of roles where “human input is essential”. It will be a long time before many of the more sensitive and difficult aspects of HR management are accepted as adequately handled by an algorithm.
