This is part thirteen of the continuing series on two-filter document culling. (Yes, we are going for a world record for longest law blog series. :) Document culling is very important to successful, economical document review. Please read part twelve before this one.

Limiting Final Manual Review

In some cases you can, with client permission (often insistence), dispense with attorney review of all or nearly all of the documents in the upper half. You might, for instance, stop after the manual review has attained a well-defined and stable ranking structure. For example, you have reviewed only 10% of the probable relevant documents (top half of the diagram), but decide to produce the other 90% of the probable relevant documents without attorney eyes ever looking at them. There are, of course, obvious problems with privilege and confidentiality in such a strategy. Still, in some cases, where appropriate clawback and other confidentiality orders are in place, the client may want to risk disclosure of secrets to save the costs of final manual review. This should, however, only be done with full disclosure and understanding of the considerable risks involved. We do not recommend this bypass, but on some rare occasions it makes sense.

Such productions also carry a danger of imprecision, where a significant percentage of irrelevant documents is included. This in turn raises the concern that an adversary's review of those other documents could engender other suits, even if there is some agreement for the return of irrelevant documents. Once the bell has been rung, whether by a privileged or a hot document, it cannot be un-rung.

Case Example of Production With No Final Manual Review

In spite of the dangers of the unringable bell, the allure of extreme cost savings can be strong to some clients in some cases. For instance, I did one experiment using multimodal CAL with no final review at all, where I still attained fairly high recall, and the cost per document was only seven cents. I did all of the review myself acting as the sole SME. The visualization of this project would look like the below figure.

Note that if the SME review pool were drawn to scale according to the number of documents read, then, in most cases, it would be much smaller than shown. In the review where I brought the cost down to $0.07 per document, I started with a document pool of about 1.7 million and ended with a production of about 400,000. The SME review pool in the middle was only 3,400 documents.

As far as legal search projects go, it had an unusually high prevalence, and thus the production of 400,000 documents was very large. Four hundred thousand was the number of documents ranked with a 50% or higher probable relevance when I stopped the training. I only personally reviewed about 3,400 documents during the SME review. I then went on to review another 1,745 documents after I decided to stop training, but did so only for quality assurance purposes and using a random sample. To be clear, I worked alone, and no one other than me reviewed any documents. This was an Army of One type project.

Although I only personally reviewed 3,400 documents for training, I actually instructed the machine to train on many more documents than that. I just selected them for training without actually reviewing them first. I did so on the basis of ranking and judgmental sampling of the ranked categories. It was somewhat risky, but it did speed up the process considerably, and in the end worked out very well. I later found out that information scientists often use this technique as well. See, e.g., Evaluation of Machine-Learning Protocols for Technology-Assisted Review in Electronic Discovery, SIGIR’14, July 6–11, 2014, at pg. 9.

My goal in this project was recall, not precision, nor even F1, and I was careful not to over-train on irrelevance. The requesting party was much more concerned with recall than precision, especially since the relevancy standard here was so loose. (Precision was still important, and was attained too. Indeed, there were no complaints about that.) In situations like that the slight over-inclusion of relevant training documents is not terribly risky, especially if you check out your decisions with careful judgmental sampling, and quasi-random sampling.

I accomplished this review in two weeks, spending 65 hours on the project. Interestingly, my time broke down into 46 hours of actual document review time, plus another 19 hours of analysis. Yes, about one hour of thinking and measuring for every two and a half hours of review. If you want the secret of my success, that is it.

I stopped after 65 hours, and two weeks of calendar time, primarily because I ran out of time. I had a deadline to meet and I met it. I am not sure how much longer I would have had to continue the training before the training fully stabilized in the traditional sense. I doubt it would have been more than another two or three rounds; four or five more rounds at most.

Typically I have the luxury to keep training in a large project like this until I no longer find any significant new relevant document types, and do not see any significant changes in document rankings. I did not think at the time that my culling out of irrelevant documents had been ideal, but I was confident it was good, and certainly reasonable. (I had not yet uncovered my ideal upside down champagne glass shape visualization.) I saw a slow down in probability shifts, and thought I was close to the end.
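One way to put a number on "no significant changes in document rankings" is to compare each document's probability score between two consecutive training rounds. The Python sketch below is only an illustration of that idea: the function name, the 0.10 shift threshold, and the sample scores are assumptions made for this example, not settings from the project or from any review software.

```python
def ranking_shift_rate(prev_scores, curr_scores, min_shift=0.10):
    """Fraction of documents whose probable-relevance score moved by at
    least min_shift between two consecutive training rounds."""
    if len(prev_scores) != len(curr_scores):
        raise ValueError("both rounds must score the same documents")
    if not prev_scores:
        return 0.0
    moved = sum(
        1
        for before, after in zip(prev_scores, curr_scores)
        if abs(after - before) >= min_shift
    )
    return moved / len(prev_scores)


# Hypothetical scores for the same five documents in two rounds.
round_15 = [0.99, 0.62, 0.48, 0.05, 0.01]
round_16 = [0.99, 0.75, 0.45, 0.04, 0.01]
shift_rate = ranking_shift_rate(round_15, round_16)
print(f"{shift_rate:.0%} of documents shifted by 10 points or more")
```

A shift rate that keeps falling from round to round is the kind of slowdown described above. What rate counts as stable enough is a judgment call for the project, not something the code can decide.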

I had completed a total of sixteen rounds of training by that time. I think I could have improved the recall somewhat had I done a few more rounds of training, and spent more time looking at the mid-ranked documents (40%-60% probable relevant). The precision would have improved somewhat too, but I did not have the time. I am also sure I could have improved the identification of privileged documents, as I had only trained for that in the last three rounds. (It would have been a partial waste of time to do that training from the beginning.)

The sampling I did after the decision to stop suggested that I had exceeded my recall goals, but still, the project was much more rushed than I would have liked. I was also comforted by the fact that the elusion sample test at the end passed my accept-on-zero-error quality assurance test: I did not find any hot documents. For those reasons (plus great weariness with the whole project), I decided not to pull some all-nighters to run a few more rounds of training. Instead, I went ahead and completed my report, added graphics and more analysis, and made my production with a few hours to spare.
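For readers wondering what a zero-error sample can actually support, here is a minimal Python sketch of the standard binomial bound: if a simple random sample of n documents contains zero hot documents, the true rate is, at the chosen confidence level, no higher than 1 - (1 - confidence)^(1/n). The function name is hypothetical. The sample size of 1,745 is borrowed from the random QA sample mentioned earlier purely for illustration; the text does not say that the elusion test used exactly that sample.

```python
def zero_error_upper_bound(sample_size, confidence=0.95):
    """Upper bound on the true rate of an event when a simple random
    sample of sample_size documents contains zero instances of it."""
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    return 1.0 - (1.0 - confidence) ** (1.0 / sample_size)


# Assumption: the 1,745-document random QA sample is used as the sample size.
bound = zero_error_upper_bound(1745)
print(f"Upper bound on the hot-document rate: {bound:.4%}")
```

The result is close to the familiar "rule of three" approximation, 3/n. Passing a zero-error test therefore does not prove that no hot documents remain, only that they are rare in the sampled population.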

A scientist hired after the production did some post-hoc testing that confirmed, at an approximate 95% confidence level, a recall achievement of between 83% and 94%. My work also withstood all subsequent challenges. I am not at liberty to disclose further details.

In post hoc analysis I found that the probability distribution was close to the ideal shape that I now know to look for. The diagram below represents an approximate depiction of the ranking distribution of the 1.7 million documents at the end of the project. The 400,000 documents produced (obviously I am rounding off all these numbers) were ranked 50% or higher, and the 1,300,000 not produced were ranked less than 50%. Of the 1,300,000 negatives, 480,000 documents were ranked with only 1% or less probable relevance. On the other end, the high side, 245,000 documents had a probable relevance ranking of 99% or more. There were another 155,000 documents with a ranking between 99% and 50% probable relevant. Finally, there were 820,000 documents ranked between 49% and 1% probable relevant.
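The rounded counts above can be cross-checked with a few lines of Python. This is only a bookkeeping sketch: the band labels and variable names are chosen for the example, and the counts are the rounded figures given in the text.

```python
# Rounded document counts from the text, keyed by probable-relevance band.
bands = {
    "99% or more": 245_000,
    "50% up to 99%": 155_000,
    "above 1%, below 50%": 820_000,
    "1% or less": 480_000,
}

produced = bands["99% or more"] + bands["50% up to 99%"]
withheld = bands["above 1%, below 50%"] + bands["1% or less"]
total = produced + withheld

# Share of the whole collection that falls in each band.
shares = {band: count / total for band, count in bands.items()}

for band, count in bands.items():
    print(f"{band:>20}: {count:>9,} ({shares[band]:.1%})")
print(f"produced={produced:,} withheld={withheld:,} total={total:,}")
```

The two extreme bands (99% or more, and 1% or less) together hold roughly 43% of the collection, which is the wide-at-both-ends shape described above.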

The file review speed realized here, about 35,000 files per hour, and the extremely low cost of about $0.07 per document would not have been possible without the client’s agreement to forgo full document review of the 400,000 documents produced. A group of contract lawyers could have been brought in for second pass review, but that would have greatly increased the cost, even assuming a billing rate for them of only $50 per hour, which was 1/10th my rate at the time (it is now much higher).
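A rough back-of-the-envelope check, in Python, shows why a second pass review would have changed the economics. The document counts, hours, and the $50 hourly rate come from the text. The contract reviewer speed of 50 documents per hour is a hypothetical figure chosen only to make the arithmetic concrete; it is not taken from this project.

```python
TOTAL_DOCS = 1_700_000      # starting document pool (from the text)
PRODUCED_DOCS = 400_000     # documents produced (from the text)
REVIEW_HOURS = 46           # hours of actual document review (from the text)
PROJECT_HOURS = 65          # review plus analysis hours (from the text)
CONTRACT_RATE = 50          # dollars per hour for contract lawyers (from the text)

# Hypothetical assumption: documents one contract lawyer reviews per hour.
ASSUMED_DOCS_PER_HOUR = 50

# Throughput measured against the whole pool culled and ranked.
files_per_review_hour = TOTAL_DOCS / REVIEW_HOURS
files_per_project_hour = TOTAL_DOCS / PROJECT_HOURS

# Cost of an eyes-on second pass over everything produced.
second_pass_hours = PRODUCED_DOCS / ASSUMED_DOCS_PER_HOUR
second_pass_cost = second_pass_hours * CONTRACT_RATE
added_cost_per_doc = second_pass_cost / TOTAL_DOCS

print(f"Files per review hour:  {files_per_review_hour:,.0f}")
print(f"Files per project hour: {files_per_project_hour:,.0f}")
print(f"Second pass: {second_pass_hours:,.0f} hours, ${second_pass_cost:,.0f}")
print(f"Added cost per document in the pool: ${added_cost_per_doc:.2f}")
```

Dividing the 1.7 million documents by the 46 review hours gives roughly 37,000 files per hour, in the same range as the figure quoted above. Under the assumed reviewer speed, a second pass over 400,000 documents would take 8,000 hours and cost $400,000, several times the $0.07 per document achieved without it.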

The client here was comfortable with reliance on confidentiality agreements for reasons that I cannot disclose. In most cases litigants are not, and insist on eyes-on review of every document produced. I well understand this, and in today’s harsh world of hardball litigation it is usually prudent to do so, clawback or no.

Another reason the review was so cheap and fast in this project is that there were very few transactional costs with opposing counsel, and everyone was hands off. I just did my thing, on my own, and with no interference. I did not have to talk to anybody; I just read a few guidance memorandums. My task was to find the relevant documents, make the production, and prepare a detailed report – 41 pages, including diagrams – that described my review. Someone else prepared a privilege log for the 2,500 documents withheld on the basis of privilege.

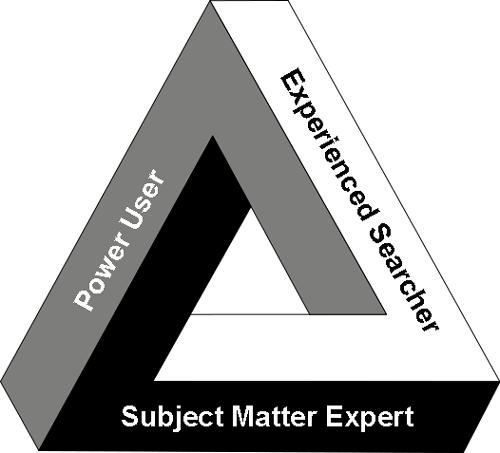

I am proud of what I was able to accomplish with the two-filter multimodal methods, especially as it was subject to the mentioned post-review analysis and recall validation. But I would not want to do it again. Working alone like that was very challenging and demanding. Further, it was only possible at all because I happened to be a subject matter expert in the type of legal dispute involved. There are only a few fields where I am competent to act alone as an SME. Moreover, virtually no legal SMEs are also experienced ESI searchers and software power users. In fact, most legal SMEs are technophobes. I have even had to print out key documents on paper to work with some of them.

Even if I have adequate SME abilities on a legal dispute, I now prefer a small team approach to a solo approach. I now prefer to have one or two attorneys assisting me on the document reading, and a couple more assisting me as SMEs. In fact, I can act as the conductor of a predictive coding project where I have very little or no subject matter expertise at all. That is not uncommon. I just work as the software and methodology expert; the Experienced Searcher.

Recently I worked on a project where I did not even speak the language used in most of the documents. I could not read most of them, even if I tried. I just worked on procedure and numbers alone. Others on the team got their hands in the digital mud and reported to me and the SMEs. This works fine if you have good bilingual SMEs and contract reviewers doing most of the hands-on work.

To be continued …. (final installment comes next!)
